On Thursday, Box kicked off its BoxWorks developer conference by announcing a new set of AI features that build agentic AI models into the backbone of the company’s products.

The conference has more product announcements than usual, reflecting the increasingly rapid pace of AI development at the company: Box launched its AI studio last year, followed by a new set of data-extraction agents in February and others for search and deep research in May.

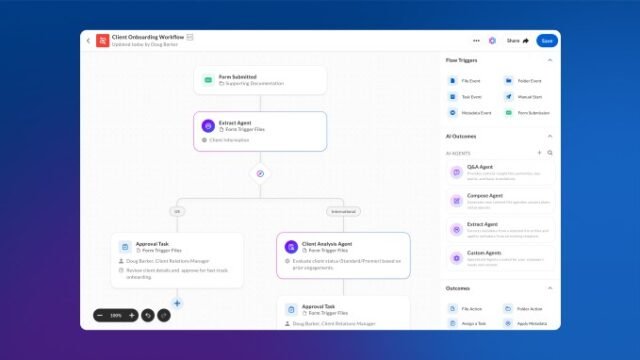

Now the company is rolling out a new system called Box Automate. It acts as a kind of operating system for AI agents, breaking down workflows into different segments that can be augmented with AI as needed.

I spoke with CEO Aaron Levie about the company’s approach to AI and the perilous work of competing with foundation model companies. Unsurprisingly, he was very bullish on the potential of AI agents in the modern workplace, but he was also clear about the limitations of current models and how to manage those limitations with existing technology.

This interview has been edited for length and clarity.

You’re announcing a bunch of AI products today, so I want to start by asking about the big picture vision. Why build AI agents into a cloud content management service?

So the thing that we think about all day long – and what we’re focused on at Box – is how much work is changing because of AI. And the vast majority of the impact right now is on workflows that involve unstructured data. We’ve already been able to automate everything that deals with structured data that goes into a database. If you think about CRM systems, ERP systems, HR systems, we’ve already had years of automation in that space. But where we’ve never had automation is something that touches unstructured data.

Think about any kind of legal review process, any kind of marketing asset management process, any kind of merger and acquisition deal review – all of these workflows deal with a lot of unstructured data. People have to review this data, make updates, make decisions, and so on. We’ve never been able to bring much automation to these workflows. We’ve been able to describe them in software, but computers just haven’t been good enough at reading a document or looking at a marketing asset.

So for us, AI agents mean that for the first time, we can actually use all this unstructured data.

What about the risks of deploying agents in a business context? Some of your customers must be nervous about deploying something like this on sensitive data.

What we’ve seen from customers is that they want to know that every time they run this workflow, the agent is going to execute more or less the same way, at the same point in the workflow, and they won’t have things going off the rails. You don’t want an agent to make some compounding mistake, where after it has handled the first couple hundred submissions, it starts running wild.

It becomes really important to have the right demarcation points where the agent starts and the rest of the system ends. Every workflow has this question of what should be deterministic guardrails, and what can be fully agentic and non-deterministic.

What you can do with Box Automate is decide how much work you want each individual agent to do before it hands off to a different agent. So you might have a submission agent that’s separate from the review agent, and so on. It allows you to basically deploy AI agents at scale in any kind of workflow or business process in the organization.

What kinds of problems do you guard against by splitting up the workflow?

We’ve already seen some of the limitations even in the most advanced fully agentic systems, like Claude Code. At some point in the task, the model runs out of context-window room to keep making good decisions. There’s no free lunch in AI right now. You can’t just have a long-running agent with an unlimited context window go after any task in your business. So you have to break up the workflow and use sub-agents.

I think we’re in the era of context within AI. What AI models and agents need is context, and the context they need is sitting inside your unstructured data. So our whole system is really designed to figure out what context you can give the AI agent to ensure it performs as effectively as possible.

There’s a bigger debate in the industry about the benefits of big, powerful frontier models compared to models that are smaller and more reliable. Does this put you on the side of the smaller models?

I should probably clarify: nothing about our system prevents a task from being arbitrarily long or complex. What we’re trying to do is create the right guardrails so that you can decide how agentic you want that task to be.

We don’t have a particular philosophy about where people should be on that continuum. We’re just trying to design a future-proof architecture. We’ve designed it in such a way that, as the models improve and as agentic capabilities improve, you will just get all of those benefits directly in our platform.

The other concern is data control. Because models are trained on so much data, there’s a real fear that sensitive data will get regurgitated or misused. How does that factor in?

It’s where a lot of AI deployments go wrong. People think, “Hey, this is easy. I’ll give an AI model access to all of my unstructured data, and it will answer questions for people.” And then it starts giving you answers on data that you don’t have access to, or shouldn’t have access to. You need a very powerful layer that handles access controls, data security, permissions, data governance, compliance, everything.

So we’re benefiting from a couple of decades that we’ve spent building a system that basically solves this exact problem: How do you ensure that only the right person has access to each piece of data in a company? So when an agent answers a question, you know deterministically that it can’t get any data that that person shouldn’t have access to. It’s just something fundamentally built into our system.

Earlier this week, Anthropic released a new feature for uploading files directly to Claude.ai. It’s a long way from the kind of file management that Box does, but you must be thinking about potential competition from foundation model companies. How are you approaching this strategically?

So if you think about what enterprises need when they deploy AI at scale, they need security, permissions, and control. They need a user interface, they need powerful APIs, and they want their choice of AI models, because one day one AI model handles some use case for them better than another, but then that could change, and they don’t want to be locked into one particular platform.

So what we’ve built is a system that effectively enables all of these capabilities. We do storage, security, permissions, vector embedding, and we connect to every leading AI model out there.